In the CHI community we have the notion of non-archival publications. Some years back this concept may have been a good one, but I find it harder and harder to understand. Over the last months I talked with several people about this concept, and in Paris I discussed it with several colleagues who are involved in SIGCHI. Here are some of my thoughts, hopefully as a starting point for further discussion.

First, a short introduction to the concept of non-archival publications: non-archival publications in the “CHI world” are papers that are published and shown at the conference, but that must not be held against a later publication. In essence, these papers are considered as not published when reviewing an extended version of the paper. A typical example is to publish a work in progress (WIP) paper in one year outlining the concept, the research path you have started to take, and some initial findings. Then in the following year you publish a full paper that includes all the data and a solid analysis. In principle this is a great way of doing research: you get feedback from the community along the way and then publish a larger piece of work. Elba in our group did this very well: a WIP at CHI 2010 [1] and then the full paper at CHI 2011 [2]. This shows that there is value in it, and I understand the motivation for creating the concept of non-archival publications.

Over the last years, however, I have seen a number of points that highlight that the concept of non-archival publication is anything but straightforward to deal with. The following points are from experience in my group over the last years.

1) Non-archival publications are in fact archival. Once you assign a document a DOI and include it in a digital library (DL), it is archived. The purpose of a digital library and the DOI is that things will live on, even if people’s websites are gone. That the authors keep the copyright and can publish the work again does not change the fact that the paper is archived. It is hard to explain to someone from another community (e.g. during a TPC meeting of PerCom) that a paper which has a DOI, is in the ACM DL, counts towards the author’s download and citation statistics in the ACM DL, and is indexed by Google Scholar has to be considered as not published when assessing a new publication.

2) Non-archival publications may be the only publication on a topic. Sometimes people have a really cool idea and some initial work, and they publish it as a WIP (non-archival). Then over the years the authors do not get around to writing the full paper, e.g. because they did not get the funding to do it. Hence the non-archival work in progress paper is the only publication that the authors have about this work. As they believe it is interesting, they and probably other people will reference this work. Referencing something that is non-archival is questionable, but not in this case, as it is in fact archival: it is in the DL with a DOI. Here is an example from our own experience: some time ago we had what we considered a cool idea to change the way smart objects can be created [3]. We built initial prototypes but did not have funding for the full project (we are still working on getting it). The WIP is the only “paper” we “published” on it, and hence we keep it in our CVs.

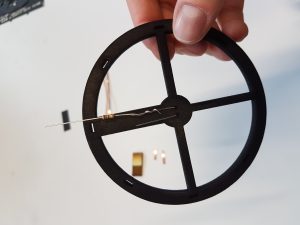

3) Changes in authorship between non-archival and full paper. Academia is a dynamic environment, and hence things are started in one place and continued somewhere else. In this process the people doing the research are very likely to change. To account for this we typically include a reference to the first non-archival publication to acknowledge the earlier contributions made. In one example we had an idea for a navigation system that we explored very superficially in Munich and wrote up as a WIP [4]. Enrico then moved on to Lancaster and built a serious system and ran a study, and as he is a nice person he referenced the WIP to acknowledge that other people were involved in the initial phase of creating the idea [5]. By doing so he increased Antonio’s and my citation counts, as we list the WIP paper on our Google Scholar pages.

4) Non-archival publications are part of people’s citation counts and h-index. When assessing the performance of individuals, academia seems to move more and more towards “measurable” data, hence citation counts and the h-index may play a role. I have one “publication”, a poster at ISWC 2000 about a wearable RFID reader [6], that has 50+ citations and hence impacts my h-index (on Google Scholar). At ISWC 2000 posters were real publications in the IEEE DL, but this could equally have been a WIP at CHI. Hence the question: should non-archival material be part of the quantitative assessment of impact?

I have some further hypothetical points (inspired by the real world) that highlight some of the issues I see with the concept of non-archival publications:

Scenario A) Researcher X has a great idea for a new device and publishes a non-archival paper at Conf201X, including the idea, details of the implementation, some initial results, and a plan for how she will do the study. She has a clear plan to complete the study and publish the full paper at Conf201X+1. She runs short of time due to being ill for a few months and manages to submit only a low-quality full paper. Researcher Y talks to Researcher X at the conference, is impressed, and reads the non-archival version of the paper. He likes it and has some funds available, so he decides to do a follow-up building on this research. He hires 3 interns for the summer, gets 20 of the devices built, does a great study, and submits a perfect paper. The paper of Researcher Y is accepted and the paper of Researcher X is not. My feeling is that in this case Y should at least reference the non-archival paper of X; hence non-archival papers should be seen as previous work.

Scenario B) A researcher starts a project, creates a system, and does an initial qualitative study. He publishes the results as a non-archival paper (e.g. a WIP), including a description of the quantitative study to be conducted. Over the next months he does the quantitative study; it does not provide new insights, but confirms the initial findings. He decides to write a 4-page note in two-column format that is over 95% the same text as the previously published 6-page WIP, with just the addition of one paragraph stating that a quantitative study was conducted which confirmed the results. In this case having both papers in the digital library does not feel right. The obvious solution would be to replace the work in progress with the note.

Here is a proposal for how non-archival publications could be replaced:

- Everything that is published in the (ACM) digital library and has a DOI is considered an archival publication (as it in fact is)

- Publications carry labels such as WIP, Demo, Note, Full paper, etc.

- Scientific communities can decide to have certain venues that can be evolutionary; e.g. for SIGCHI, to my current understanding, these would be WIP, Interactivity, and workshops.

- Evolutionary publications can be replaced by “better” publications by the authors, e.g. an author of a WIP can replace this WIP in the next year with a Full paper or a Note, the DOI stays the same

- To ensure accountability (with regard to the DOI), the replaced version remains in the appendix of the new version, e.g. the full paper then has as its appendix the WIP it replaces

- If evolutionary publications are not replaced by the author they stay as they are and other people have to consider these as previous work

- Citations accumulated along the evolutionary path are counted towards the latest version.

- Authors can decide (e.g. when the project team changes, when the results are contradictory to the initial publication, when significant parts of the system change, when authors change) not to go down the evolutionary path. In this case they are measured against the state of the art, which includes their own work.

In the CHI context this could work as follows: you have a WIP in year X; in year X+1 you decide to replace the WIP with the accepted full paper that extends it; in year X+3 you decide to replace the full paper with your accepted ToCHI paper that extends it. When people download the ToCHI paper, they get the full conference paper and the WIP in the appendix. The citations of the WIP and of the full paper are included in the citations of the journal paper. Where you combine several conference papers into a consolidated journal paper, you would create a new instance that does not replace any of them, or you might replace one of the conference papers.

This approach does not solve all the problems but I hope it is a starting point for a new discussion.

Simply claiming that material which is in the ACM DL and has a DOI is not archival feels like creating our own little universe in which we decide that gravity is not relevant…

UPDATE – Discussion on Facebook (2012-12-11):

—

Comment by Alan Dix:

It seems there are three separate notions of ‘archival’:

(i) doesn’t count as prior publication for future, say, journals

(ii) is recorded in some stable way to allow clear citation

(iii) meets some minimum level against some set of quality criteria

In the days before people treated conferences as if they were journal publications, it was common to have major publications in university or industrial lab ‘internal’ report series. These were often cited, and if they made it to journals, it was years later. The institutions distributed and maintained the repositories, hence they were archival by defn (ii). Conference and workshop papers likewise were, and have always been, cited widely whether or not they were officially declared ‘archival’.

Conference papers, even from prestigious conferences such as CHI, are NOT usually archival by defn (iii), or at least cannot be guaranteed to be, as conference acceptance is not a minimum standard on all criteria but more a balance between criteria. If something is really novel and important but maybe not 100% solid, it would and *should* be conference publishable, but should not be journal publishable until *everything* hits the minimum standard. A journal paper may, though, not be fantastic against any criterion: faultless != best.

As for (i), that is about venue, politics, and random rubbish rules. For a conference the issue is “is there enough new for the delegates to see?” (unless the conference is pretending to be ‘archival’ meaning (ii), but we should ignore such disingenuous venues).

For a journal, it would be quite valid to publish a paper absolutely identical (copyright issues notwithstanding) to one that had previously been published (and is archival by (i)), as its job is to ensure (ii).

This was common in the past with internal reports and common again now with eprints services providing pre-prints during submission as well as pre-publication.

In a web world *all* conference contributions are archival by defn (i) and *none* are by def. (ii).

Conferences are news channels, journals are quality agencies … and when the two get confused the discipline is in crisis.

Comment by Eva Hornecker

Reading Alan’s response, I am reminded that I was taught the distinction between ‘grey’ literature (citable, e.g. technical reports) and white/black literature (not sure anymore which is which), which is either informal and not archived (e.g. workshop position papers) or fully published and peer reviewed. The difference with WiPs etc. is that they are peer reviewed (although only gently).

Comment by Rod Murray-Smith

I guess there is also a question about whether WIPs are really still being used as “works in progress”, or more frequently as a way to attend the conference despite the paper not being lucky enough to get in. Do we have any statistics on the percentage of WIPs that are recycled from main-conference submissions? By submitting them there, authors are claiming that these papers are ready for archival publication. Similar issues apply to many workshop papers.

Comment by Alan Dix

Of course workshop position papers are often web ‘archived’ (my criterion (ii)), and some are even heavily refereed … indeed many people would prefer a CHI workshop paper on their CV to a more heavily refereed conference paper elsewhere … I guess it is about brand, like a Nike holdall.

There is another, orthogonal issue too, which is about the level and surety of the process, and which is pretty independent of the clarity and kind of criteria. You may have a poor-quality journal that uses similar criteria to a better journal, but simply has a lower bar and perhaps, because of the quality of reviewing, a lower level of confidence. I’m sure both Fiat and Ferrari have quality control, just at different levels.

In some ways I am happier with low-quality journals that you know are low quality (and therefore readers apply caveat emptor) than with high-quality conferences, where it is easy for readers to assume high quality = all OK.

This is why I always feel that all reviewing processes should have a non-blind point, as a paper with a fantastic idea but a major methodological flaw is fine if produced by an unknown person at an unknown institution (as readers will take it with a pinch of salt), but should be rejected if from a major name in the field (as it is more likely to be taken by readers as a pattern of how to do it).

Alternatively: anonymous refereeing + anonymous publication.

… and none of this is about the absolute value, significance, etc. of the work, quality control is about stopping the bad apples, not making good ones.

Comment by Susanne Boll

I fully agree. Coming initially from the Multimedia community, I never understood this concept. SIGMM and its annual conferences publish everything that undergoes peer review. Full papers are the most prestigious ones; short papers (4 pages) are for smaller contributions or more focused work. Workshops are THE platform to start new topics in the field, and of course the work is peer reviewed and published. For example, the Multimedia Information Retrieval workshop ran for several years and gained more and more interest in the field until it finally became a conference of its own.

I also found it strange this year that I reviewed a full paper and had a déjà vu, as the work had already been shown in the Interactivity session the year before. This not only makes it difficult to judge novelty but also contradicts blind review. Maybe we should look at how other SIG conferences such as Multimedia handle it.

Comment by Amanda Marisa Williams

I’m intrigued — no time at the moment but it’s bookmarked for later today. Def wanna have this conversation with some CHI veterans since I have some concerns about the archival/non-archival distinction as well.

Comment by Bo Begole

I think the crux of the issue is simply that we shouldn’t use the term “archival” at all; as you point out, anything published in the DL with a DOI is “archived”. It’s an archaic term. More properly, we should use accurate terms to describe the level of review. CHI uses the terms “refereed”, “juried” and “curated” for different levels (http://chi2013.acm.org/authors/call-for-participation/#refereed), which map to ACM categories: CHI refereed is roughly equivalent to “refereed, formally reviewed”, CHI juried to “reviewed”, and CHI curated roughly to “unreviewed”. CHI also uses the ACM criteria regarding republishability of content.

Comment by Chris Schmandt

What Bo says is good, but this distinction is lost on the masses. It’s a “CHI paper” no matter what venue. And even in the old days when we had the separate “abstracts” volume, only the few in the know could recognize the difference between the short …

—

References

[1] Elba del Carmen Valderrama Bahamóndez and Albrecht Schmidt. 2010. A survey to assess the potential of mobile phones as a learning platform for Panama. In CHI ’10 Extended Abstracts on Human Factors in Computing Systems (CHI EA ’10). ACM, New York, NY, USA, 3667-3672. DOI=10.1145/1753846.1754036 http://doi.acm.org/10.1145/1753846.1754036

[2] Elba del Carmen Valderrama Bahamondez, Christian Winkler, and Albrecht Schmidt. 2011. Utilizing multimedia capabilities of mobile phones to support teaching in schools in rural Panama. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’11). ACM, New York, NY, USA, 935-944. DOI=10.1145/1978942.1979081 http://doi.acm.org/10.1145/1978942.1979081

[3] Tanja Doering, Bastian Pfleging, Christian Kray, and Albrecht Schmidt. 2010. Design by physical composition for complex tangible user interfaces. In CHI ’10 Extended Abstracts on Human Factors in Computing Systems (CHI EA ’10). ACM, New York, NY, USA, 3541-3546. DOI=10.1145/1753846.1754015 http://doi.acm.org/10.1145/1753846.1754015

[4] Enrico Rukzio, Albrecht Schmidt, and Antonio Krüger. 2005. The rotating compass: a novel interaction technique for mobile navigation. In CHI ’05 Extended Abstracts on Human Factors in Computing Systems (CHI EA ’05). ACM, New York, NY, USA, 1761-1764. DOI=10.1145/1056808.1057016 http://doi.acm.org/10.1145/1056808.1057016

[5] Enrico Rukzio, Michael Müller, and Robert Hardy. 2009. Design, implementation and evaluation of a novel public display for pedestrian navigation: the rotating compass. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’09). ACM, New York, NY, USA, 113-122. DOI=10.1145/1518701.1518722 http://doi.acm.org/10.1145/1518701.1518722

[6] Albrecht Schmidt, Hans-W. Gellersen, and Christian Merz. 2000. Enabling Implicit Human Computer Interaction: A Wearable RFID-Tag Reader. In Proceedings of the 4th IEEE International Symposium on Wearable Computers (ISWC ’00). IEEE Computer Society, Washington, DC, USA, 193-194.

The Human-Computer Interaction Department of the University of Stuttgart, the
